Sunday, August 26, 2007

Web 2.0 Gap Cast

I wanted to test out the system, so I created a 10-second proof of concept - just click here. For me, this model doesn't fit how I would use the system (I would rather write and read than talk or record video). However, if I were traveling across the globe without a computer, this would be a great way to give friends updates on my activities. In most countries a phone is easily acquired; it is certainly easier to get than a computer and an Internet connection. I would also imagine this would be handy for real-time information. On a college campus, news of a great party would spread even more quickly!

With my upcoming travel, I will keep this tool in my Web 2.0 toolkit. I know that I will be traveling to locations where connecting to the Internet will be difficult at times. With GapCast, however, I'm just a phone call away.

Web 2.0 Browsing with Tags - Delicious

Web 2.0 Technorati

Technorati is a search engine for blogs. The need to search blogs will increase as more meaningful information is posted. I tried out Technorati for a while, and although I recognize this is an early attempt in this field, I am not sure it is ready for prime time. To test its search, I entered topics from experts in their respective fields; unfortunately, Technorati didn't return what I was hoping for. It is certainly possible that I just happened to use the wrong search words, but in this specific instance I was disappointed. Google does a very good job of bubbling the content most people find valuable up to the top and pushing the rest toward the end of the results. I decided to investigate some of the content to see if I could spot why the search results were so different from what I expected. I was surprised by what I found.

In looking at the content of top blogs, I found a number of blog authors asking readers to push their blog to the top by adding an entry and then doing a search on that blog. I think this explains, in part, why the search results above were skewed. It is too bad that Technorati doesn't ban or delete this type of behavior, as it really diminishes their service. When I saw this, the results started to make sense. Anyway, my summary of Technorati is something along these lines: I like the idea of a blog search engine, but unless they figure out how to return the right content on the first try, Google will likely put them out of business.

Web 2.0 - My Slide Show

The steps to use this site to share pictures are as follows. First, decide which format to upload your slideshow in - PDF, PPT, or one of the other options. PPT is very user friendly, so I used it. OK - now for the recipe:

- Take your pictures and place each one on its own slide. Add as many slides as needed, as long as you stay under the 30 MB file size limit. Pictures can be pasted in (cut/copy/paste) or inserted.

- Go to www.slideshow.com and log in to your free account.

- Now select the option to upload your presentation - wait a fairly long time for the presentation to upload [It's free - what do you expect...]

- Click on 'my slide show' and there you have it.

There is one gotcha that I thought I would point out. Notice that in my example the 2nd slide is just a plain gray slide? This is because I didn't have the picture centered on the slide in the PPT, so only a portion of it was captured. Each picture must be centered. I was hoping that the application would center the picture, as this seemed reasonable, but that isn't the case.

What would make www.slideshow.com even better (besides being faster and centering pictures)? A bit of user protection for content would be nice. Once pictures are uploaded, anyone on the Internet can look at them. This isn't a huge problem, but if you are taking pictures of your family or kids, you might not want this content available to the general public. It would be nice if they added access control lists or some other structure to control access.

Good luck and enjoy!

Web 2.0 SlideShow

Saturday, August 25, 2007

Web 2.0 Flickr

I also looked at Google, but I haven't found quite what I was looking for in terms of ease of implementation. Their API documentation is found here. However, I didn't find as many examples of how to use this API, and it doesn't look like it supports integration with native languages the way Flickr does. Maybe someone can point me in the right direction, as I could certainly use the help with Picasa and Google. Thanks!
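For readers comparing the two APIs, here is a rough sketch of what a Flickr REST call looks like. It only builds the request URL and makes no network access. The endpoint and the `method`, `api_key`, `tags`, `format`, and `nojsoncallback` parameters reflect Flickr's public REST API as I understand it, so check them against Flickr's current documentation. `YOUR_API_KEY` is a placeholder, and the function name `build_flickr_search_url` is hypothetical.

```python
from urllib.parse import urlencode

# Assumed endpoint for Flickr's REST API; verify against Flickr's current docs.
FLICKR_REST_ENDPOINT = "https://api.flickr.com/services/rest/"


def build_flickr_search_url(api_key: str, tags: str, per_page: int = 10) -> str:
    """Build (but do not send) a flickr.photos.search request URL.

    Hypothetical helper for illustration only.
    """
    params = {
        "method": "flickr.photos.search",
        "api_key": api_key,    # placeholder: supply your own key
        "tags": tags,          # comma-separated tag list
        "per_page": per_page,
        "format": "json",
        "nojsoncallback": 1,   # ask for plain JSON rather than a JSONP wrapper
    }
    return FLICKR_REST_ENDPOINT + "?" + urlencode(params)


if __name__ == "__main__":
    print(build_flickr_search_url("YOUR_API_KEY", "sunset,beach"))
```

With a real key, opening the printed URL in a browser should return JSON describing matching photos.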

Web 2.0 Corporate Skype

Saturday, August 18, 2007

Robotics - The Need

The author of the following article shows some clear examples of what services a robot could provide. One example is household vacuuming, which is now done by a robot. Here is a link to the robotic vacuum he mentioned. He notes that each night the robot comes out and vacuums the house. This is clearly a service that many would be interested in, and it is available today.

Another example is the Predator unmanned drone used in wartime. These drones have proven invaluable to the military, as their continued use illustrates. Other robots include devices used by SWAT and military teams to dismantle bombs. In both cases, the risk to the human operator is reduced. Robots could also be used in search and rescue operations. Combining robotics with advanced sensors could help save lives in environments where humans cannot easily go, such as burning or collapsed buildings or other hostile environments. These robots could send back information about the environment so that human operators can make better decisions. Many of these examples are highlighted in the article above.

The examples above illustrate the need for robotics. Between the commercial value and the benefit to human lives, many would agree that robotics offers solutions to problems that have yet to be addressed. Given this need, innovation is sure to follow.

Robotics - Developing the Infrastructure

In order for robotics to flourish, its computational requirements need to be addressed. Robotics leverages AI, which is recognized as computationally intensive. Looking to the future, however, we see quantum computing on the horizon. Parallel computing is also under active research right now - just look at Best Buy to see the new quad-core computers from Intel. Robotics will benefit from these advances in computing - who knows, robotics might turn out to be a major driver of them.

Grid computing is another interesting concept. If there is not enough computational power within a system, the system can reach out to the network and request additional resources. This pooled collection of networked computing resources is called grid computing, among other names.
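Here is a minimal sketch of the scatter/gather idea behind grid computing. The job is split into independent chunks, the chunks go to a pool of workers, and the partial results are combined. A local `ThreadPoolExecutor` stands in for remote grid nodes here. That is an assumption for illustration; a real grid would use scheduling middleware and network transport. The names `work_unit`, `split`, and `run_on_grid` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def work_unit(chunk: list[int]) -> int:
    """One independent piece of work; here, a sum of squares."""
    return sum(x * x for x in chunk)


def split(data: list[int], n_chunks: int) -> list[list[int]]:
    """Divide the job into roughly equal, independent chunks."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def run_on_grid(data: list[int], n_nodes: int = 4) -> int:
    """Scatter chunks to the worker pool (stand-in for grid nodes), then gather."""
    chunks = split(data, n_nodes)
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        partials = list(pool.map(work_unit, chunks))
    return sum(partials)  # combine the partial results


print(run_on_grid(list(range(1, 101))))  # 338350
```

The key property is that each chunk can be computed without knowing about the others. That independence is what lets a system farm work out to whatever resources the network offers.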

However, to reach the grid, a network must be established. A network requires not only bandwidth but also coverage. Both of these requirements are already under active research; look at the proposals around WiMAX, Wi-Fi, and 802.11n. Both long and short distances are being addressed, and data transfer speeds are becoming quite impressive and will continue to grow.

Wireless grids and city-wide coverage are also being established. Cities like Albuquerque and Portland already have blanket city coverage. Devices such as the iPhone and BlackBerry are starting to leverage VoIP to make calls, which will continue to drive the proliferation of these networks.

In short, the infrastructure that will enable robotics to thrive is either in development or on the horizon. Really, the question is whether robotics will help drive and accelerate these solutions, or whether it will be gated by current technology. My take: it will drive them. The need for robotics is huge, and when there is a need, innovation always prevails.

Robotics - Initial Read

Jim Butler talks about some very interesting aspects of robotics - areas that I had not previously thought about. For example, I was curious about Intel's involvement in this field. As it turns out, robots require a lot of computing power, both for general computation and for the AI that helps drive a robot. That computational component is a natural fit for Intel, as it is their expertise. Many other interesting items were covered in the article. Over the next few days, I'm going to blog on specific areas of this article and dissect them, as there are many areas that deserve further exploration.

Web 2.0 - MySpace - you can keep it...

Friday, August 17, 2007

Web 2.0 - Driving a change in my behavior - how about you?

Web 2.0 - Communication Tools - Linked-In

LinkedIn (found here) is a great tool that allows personal networks to be formed between individuals. I didn't find this tool; it found me. Let me explain. LinkedIn allows social networks to be built, and people can be added to your network. One day I came in to find a number of "LinkedIn" emails waiting for me. I read up on the concept and started accepting these "invitations." The power of this system becomes readily apparent as you watch your network of business contacts grow. Through a friend, I even became connected to one of the CTU professors who taught one of our classes - yup, small world! As people move around and change industries, you can keep in touch and develop your network over time.

Another neat thing about LinkedIn is that it seems to cross-reference monster.com job postings. If your network is big enough (and it grows quickly, because your friends' friends become part of it), you may be able to get an inside contact on the job front. There was a movie, Six Degrees of Separation, about the idea that everyone is within six degrees of knowing each other - this system works off of the same concept.
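The "friends of friends" effect is a graph search. The sketch below assumes a hypothetical network stored as a dictionary of direct connections. It uses breadth-first search to compute how many hops each person is from you (1st-degree, 2nd-degree, and so on). It illustrates the concept only; it is not how LinkedIn actually computes connections.

```python
from collections import deque


def degrees_of_separation(network: dict[str, set[str]], start: str) -> dict[str, int]:
    """Breadth-first search: how many hops each reachable person is from `start`."""
    degree = {start: 0}
    queue = deque([start])
    while queue:
        person = queue.popleft()
        for contact in network.get(person, set()):
            if contact not in degree:  # first visit in BFS = fewest hops
                degree[contact] = degree[person] + 1
                queue.append(contact)
    return degree


# Hypothetical network: each person maps to their direct connections.
network = {
    "me": {"alice", "bob"},
    "alice": {"me", "carol"},
    "bob": {"me"},
    "carol": {"alice", "professor"},
    "professor": {"carol"},
}
print(degrees_of_separation(network, "me"))
```

Here "professor" comes out as a 3rd-degree connection, reached through alice and then carol.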

Anyway, the point of this post is that finding the right tool for the right job is very important. If the tool you are using isn't a good fit, chances are there is a tool out there that will work better. Google Groups is great for email, but LinkedIn is better for maintaining a business/social network - IMHO.

Google Groups and Home Page

Wednesday, August 15, 2007

Mind Map

Part of an assignment is to investigate Mind Mapping Tools and Technology. I thought I would do this assignment in Google Docs for fun and then maybe publish it to a blog, although I'm not sure yet. Regardless, I thought this would be a good test of how easily Google Docs handles a longer collection of information.

I thought I would show off a free tool called FreeMind, which can be found here. This is a great tool, and I will include some information about it along with some screen captures. First, what is this tool, you might ask? FreeMind is a Java-based tool (so it runs on many platforms) that helps capture and share information. That is a broad description, but this tool does a lot! It is not web based; however, it is still a great tool to have in the toolbox. I often use it when I am brainstorming different ideas or trying to determine a flow of information, but it can do much more than that.

Step 1 is to go to the link above and download the program for your platform. The install is very easy, although the first time you run it, it might take a second or two to load. After it is installed, the best way to get started is to click Help and then Documentation. The documentation that comes up is actually a mind map! By clicking through the navigation and help, you get a great feel for how the program works.

Here is a quick capture of a mind map - I took this from the help tutorial, which demonstrates many of the program's nice capabilities.

What is nice is that the central idea is captured and then branches come off of this idea. Each branch can have more branches - think of it as a tree with a trunk, which has limbs, which have branches, then twigs, and finally leaves. There is no limit to how many branches are present, and you can have as many leaves as needed.
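The trunk-and-branches structure described above is a tree. As a rough sketch, the `Node` class below (a hypothetical name) models a central idea with nested branches and collects its leaves. It also writes the map as nested `<node TEXT="...">` elements inside a `<map>` root. That XML layout approximates FreeMind's `.mm` file format from memory, so treat the element names and the `version` value as assumptions rather than a spec.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field


@dataclass
class Node:
    """One idea in the map: the root is the central idea, children are branches."""
    text: str
    children: list["Node"] = field(default_factory=list)

    def add(self, text: str) -> "Node":
        """Attach a new branch and return it so it can grow its own branches."""
        child = Node(text)
        self.children.append(child)
        return child

    def leaves(self) -> list[str]:
        """Collect the text of every leaf (a node with no branches)."""
        if not self.children:
            return [self.text]
        return [leaf for child in self.children for leaf in child.leaves()]


def to_xml(node: Node) -> ET.Element:
    """Serialize as nested <node TEXT="..."> elements (assumed FreeMind-like layout)."""
    element = ET.Element("node", TEXT=node.text)
    for child in node.children:
        element.append(to_xml(child))
    return element


root = Node("Vacation plan")
travel = root.add("Travel")
travel.add("Flights")
travel.add("Hotel")
root.add("Budget")

mind_map = ET.Element("map", version="0.8.0")  # version value is an assumption
mind_map.append(to_xml(root))
print(root.leaves())
print(ET.tostring(mind_map, encoding="unicode"))
```

Because every branch is just another `Node`, the map can grow to any depth, which matches the "no limit to how many branches" behavior described above.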

Another nice feature is that the content in a leaf can trigger other activities. For example, one leaf might hold a large help section on shortcut key mappings, as shown on the left here.

On the right there are expandable links. Some of these links open web pages while others can link to other mind maps.

Executing other files is also possible (.bat, .exe, etc.).

Another great use for mind maps and this application is brainstorming. When a problem requires input from lots of people, this program can rapidly capture those ideas and help organize them into a meaningful structure.

Anyway, check out this application - it's free and very powerful!

Steve Chadwick

Aug 2007.

Success with Google Distribution Lists

Tuesday, August 14, 2007

Passwords and Web 2.0

First, let's talk about passwords. Passwords should be at least 8 characters in length, and longer is always better (as long as the next criterion is met). Passwords should contain a combination of upper and lower case letters, numbers (0-9), and at least one special character, such as !#$%^&*()_+@. If your password meets all of the above criteria, most would consider it a "strong" password.
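The criteria above translate directly into a check. This is a minimal sketch with one assumption: it treats any character in Python's `string.punctuation` as a special character. That set covers the examples listed (!#$%^&*()_+@) and a few more. The function name `is_strong` is hypothetical. Passing the check only means a password meets these rules, not that it is unguessable.

```python
import string


def is_strong(password: str, min_length: int = 8) -> bool:
    """Check length, mixed case, at least one digit, and at least one special character."""
    return (
        len(password) >= min_length
        and any(c.islower() for c in password)
        and any(c.isupper() for c in password)
        and any(c in string.digits for c in password)
        # Assumption: any ASCII punctuation counts as a "special character".
        and any(c in string.punctuation for c in password)
    )


print(is_strong("Tr4vel!Now"))  # True
print(is_strong("password"))    # False
```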

Some folks say that you should use a different password for every account. That is certainly the most secure approach, but it is pretty tough to remember which password goes with each account. If you go this route, there is a great tool called Password Safe, found here. This tool is free and is well regarded for its security. Essentially, Password Safe is an encrypted collection of all of your passwords, unlocked with one master password. The tool has a ton of neat features, including the ability to generate strong passwords.
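If you would rather not rely on a tool's generator, Python's standard `secrets` module can produce a strong random password. This is a minimal sketch, not Password Safe's algorithm. It guarantees at least one lowercase letter, one uppercase letter, one digit, and one special character (using the example specials listed in the criteria above). It then fills the rest at random and shuffles. `generate_password` is a hypothetical name.

```python
import secrets
import string

# Character classes; SPECIALS uses the example characters from the post.
LOWER = string.ascii_lowercase
UPPER = string.ascii_uppercase
DIGITS = string.digits
SPECIALS = "!#$%^&*()_+@"


def generate_password(length: int = 16) -> str:
    """Random password guaranteed to contain every character class."""
    classes = [LOWER, UPPER, DIGITS, SPECIALS]
    if length < len(classes):
        raise ValueError("length must be at least 4 to cover all classes")
    # One character from each class, then fill the rest from the combined pool.
    chars = [secrets.choice(c) for c in classes]
    pool = "".join(classes)
    chars += [secrets.choice(pool) for _ in range(length - len(classes))]
    secrets.SystemRandom().shuffle(chars)  # avoid a predictable class order
    return "".join(chars)


print(generate_password())
```

Using `secrets` rather than `random` matters here: `secrets` draws from the operating system's cryptographically secure source.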

However, not everyone wants the added overhead of using a tool to keep track of all of their passwords. Another option, although not as secure, is a two- or three-tiered password system. This approach divides your accounts into tiers. For example, your banking, brokerage, and other accounts that involve money are guarded by one strong password that is used only with those accounts. A second strong password (different from the first) protects common accounts such as email, or other less valuable accounts. The advantage is that your financial password is used far less often than your everyday password (email, etc.), so exposing the everyday password does not expose your money. As such, this is more secure than using the same password for every account.

Regardless of the method you choose, make sure to change your passwords every 90 days (more frequently is better). Also realize that if someone is determined to get into your account, they will probably figure out a way; the key is to make it tough enough that it isn't worth their time. Finally, don't rule out social engineering as a way to attack your account. Social engineering refers to techniques for extracting a password from you under some "official" sounding pretext. These techniques rely on impersonation, such as someone claiming they want to help you, or an official-looking website asking for your information (phishing).

Thursday, August 9, 2007

Web Cam Finally Found

Endnote X1 with Word 2007

Friday, August 3, 2007

A Future Prediction: Multi-core Design

First, Intel has demonstrated a working 80-core processor, and a company called ClearSpeed has shown a 96-core processor. In both cases, the cores are on a single chip. Here is the article. The article notes several issues that need to be resolved. First, the chips do not run the x86 instruction set, which is very popular today. Second, how memory interfaces with these chips needs to be resolved. Third, developers need a way to leverage these chips through parallel programming. This third issue has come up in several other posts...

So, when will the 80-core be mainstream? Intel notes that the 80-core should be available in 5 years [article]. Given the complexity of the manufacturing process, my gut feel says that is about right.

So - what does this mean for my prediction? Based on this new data, I think my gut feel may have been overly aggressive. I would like to change to a data-based prediction, one of the following: in 10 years we will hit 512-core processors, or in 15 years we may see 1024. The 10-year/512-core figure also lines up better with the MIT data that I blogged about earlier.
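To compare the two forecasts, it helps to compute the doubling period each one implies. This sketch assumes a 2007 baseline of 4 cores (the quad-core chips mentioned in earlier posts) and a steady exponential trend. Both are simplifying assumptions, and `implied_doubling_time` is a hypothetical helper.

```python
import math


def implied_doubling_time(start_cores: int, target_cores: int, years: float) -> float:
    """Years per doubling needed to grow from start_cores to target_cores in `years`."""
    doublings = math.log2(target_cores / start_cores)
    return years / doublings


# Assumed baseline: 4 cores in 2007.
for target, years in [(512, 10), (1024, 15)]:
    t = implied_doubling_time(4, target, years)
    print(f"{target} cores in {years} years -> one doubling every {t:.2f} years")
```

Going from 4 to 512 cores takes 7 doublings, so the 10-year forecast implies one doubling roughly every 1.43 years. Going from 4 to 1024 takes 8 doublings, so the 15-year forecast implies one every 1.875 years, the more conservative of the two.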

Second Life - How to change your password

Steps....

- Go to http://www.SecondLife.com

- Click on support [Top menu item on right hand side of screen]

- Scroll down this page and, on the left-hand side, look for the section called "My Second Life" and choose "My Account".

- After clicking "My Account," look on the right-hand side of the screen for an option called "Password." Select it, and the password screen will be presented.

These directions were recorded on 3-Aug-2007. Obviously, Second Life could change the menu layout at any time, which would invalidate these directions.

Wednesday, August 1, 2007

A Future Prediction: 512 Bit Computing

I also stated that I thought Apple had a 128-bit system out. I did a bit more checking, and I don't believe that to be the case. I would welcome a correction, but from poking around the Apple site, it appears to be primarily 64-bit computing. Also, Wikipedia confirmed here that there are no mainstream 128-bit systems (Aug 1, 2007). The exception noted by Wikipedia is a few supercomputers that are true 128-bit machines.

In reading through several other sites, it seems the general feeling is that 64-bit architecture will take a while to be adopted, and that it should carry us a ways into the future. However, if this is the case, one has to wonder what Transmeta is working on and how close they are to success.

Does this change my opinion or prediction? I don't think so. Even though this is clearly not mainstream, it is interesting that Transmeta is currently working in this space. Transmeta has delivered unique systems in the past (ultra-low-power systems), and they should not be underestimated. It seems that there is a reasonable opportunity for a 512-bit system to emerge.

A Future Prediction: StreamIt, A programming language

The StreamIt home page can be found here. StreamIt is a language that focuses on multi-core systems, processing data streams, and parallel execution. The language aims to reduce complexity of this type of parallel processing and move some portions of this to the compiler.
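To give a feel for the stream model, here is a rough Python sketch of the idea. It is not StreamIt syntax. Small filters each consume a stream and produce a stream, and they are connected in series as a pipeline. In StreamIt, the compiler can map such filters onto separate cores; this Python version simply runs them one after another using generators. The names `scale`, `moving_average`, and `pipeline` are hypothetical.

```python
from typing import Callable, Iterable, Iterator

# A filter takes an input stream and returns an output stream.
Filter = Callable[[Iterable[float]], Iterator[float]]


def scale(factor: float) -> Filter:
    """Stateless filter: multiply every item in the stream."""
    def work(stream: Iterable[float]) -> Iterator[float]:
        for x in stream:
            yield x * factor
    return work


def moving_average(window: int) -> Filter:
    """Stateful filter: emit the average of the last `window` items."""
    def work(stream: Iterable[float]) -> Iterator[float]:
        buf: list[float] = []
        for x in stream:
            buf.append(x)
            if len(buf) > window:
                buf.pop(0)  # drop the oldest item
            if len(buf) == window:
                yield sum(buf) / window
    return work


def pipeline(*filters: Filter) -> Filter:
    """Connect filters in series, each feeding the next."""
    def work(stream: Iterable[float]) -> Iterator[float]:
        for f in filters:
            stream = f(stream)
        yield from stream
    return work


smooth = pipeline(scale(2.0), moving_average(3))
print(list(smooth([1, 2, 3, 4, 5])))  # [4.0, 6.0, 8.0]
```

The appeal of the model is that each filter only sees its own input and output. That is what makes it plausible for a compiler, rather than the programmer, to decide how the filters run in parallel.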

This is very exciting, as MIT is often at the forefront of new technology that is later adopted by industry. If anyone has any experience with StreamIt (the good, the bad, or the ugly), I would love to hear from you. Please post a comment and share your experience.

A Future Prediction: Multi-core and Programming Languages

My first thought was to look at programming languages and multi-core. MIT is active in multi-core research, and they had a great presentation posted here. Check out slide 12 - it shows a chart predicting that multi-core processors will approach 512 cores just before 2020. This figure was taken from Saman Amarasinghe's presentation (click the link above). Here is the slide that is very interesting.

This presentation should be reviewed by anyone interested in where the technology is going, and Saman Amarasinghe did a great job pulling together a solid overview. His concluding slide speaks to the point that we have an opportunity to really tackle this tough multi-core problem, since no existing solution is in place. Many of the slides also address concerns from my original prediction. Having read this presentation, I am confident that we will see a breakthrough in the next few years that will fundamentally change our approach.

A Future Prediction: A Prediction with No Data

In 10 years, I think that computers will have 1024 cores and will run at 512 bits. All software will compile down to code that can run in parallel. I also think that today's threading models will be moved into the compiler, thereby simplifying the writing of code.

OK - that is my prediction. Here are some of the reasons behind it. The number of cores is increasing - we have gone from 1 -> 2 -> 4, and Intel has noted that they have produced an 80-core chip. I believe that the benefit of parallel execution tapers off around 16 cores, but I think that barrier will be pushed. We have also seen operating systems move from 16-bit to 32-bit and now 64-bit, and I think Apple runs some systems at 128-bit. These transitions seem slower, so maybe in 5 years we will see 256-bit, followed by a push for 512-bit. Finally, writing code: I am sure it is going to be vastly different from what we have now, and in particular I believe our threading model will change. Today's model requires a fairly deep knowledge of how threads work, and threaded code is very difficult to debug and costly to maintain. I think this complexity will shift from the source code into the compiler, and that the compiler will determine what can run in parallel and what needs to run serially.
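One standard way to reason about why parallel gains taper off is Amdahl's law (my addition, not part of the original prediction). If a fraction p of a program can run in parallel, the best speedup on n cores is S(n) = 1 / ((1 - p) + p/n). The sketch below assumes a workload that is 90% parallel; `amdahl_speedup` is a hypothetical helper name.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: overall speedup when only part of a program runs in parallel."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


# Assumed workload: 90% of the run time parallelizes perfectly.
for n in (1, 2, 4, 16, 80, 1024):
    print(f"{n:>5} cores -> {amdahl_speedup(0.9, n):5.2f}x")
```

With p = 0.9, 16 cores give a 6.4x speedup, and no number of cores can exceed 10x. The payoff from 1024 cores therefore depends on shrinking the serial fraction, which is exactly the work the post hopes compilers will take on.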

So there it is - in 10 years - let's see what happens.

A Future Prediction: How to approach the problem of prediction

A Future Prediction: Topic Introduction

I am sure that I will be blogging on other topics throughout this series, and as such, I need a convention. I will adopt the following approach: I will title each post "A Future Prediction" followed by a more descriptive qualifier. I will try to make each post stand alone, but that might be more difficult than I expect. Still, after you have read this introduction, you should be able to skip around a bit. Please realize that as you jump around, you are going to see different angles of this problem.

OK - with that out of the way - let's go have some fun and see what's out there!

Predictions: A Success and a Failure - How do they compare?

I thought that this was interesting and very different from Dr. Halal's approach, which uses data from various sources to build models that make more accurate predictions. I tend to agree with Halal, but Moore's Law is a great example of how a brilliant person can have insight into the future without using a lot of data.

These two approaches are very different. As stated in our textbook, when dealing with economic data, most predictive models tend to fail, regardless of complexity.

I am left with the following question: how do we reconcile the insights of brilliant people with predictive models? What do you think? Please leave a comment, as I would love to hear your thoughts - maybe you can help me shape my own.

A Successful Prediction - Moore's Law - Part 3

So - how did Moore's prediction come about? Here is an article that discusses that question specifically. The highlights are as follows. Essentially, Moore had noticed that the number of components on a chip was doubling each year, and from there he concluded that this trend would continue. He later revised this to doubling every two years. However, Moore notes that he didn't realize the significance of his observation at the time. In fact, there is a great quote found here: "Moore says he now sees his law as more beautiful than he had realised. "Moore's Law is a violation of Murphy's Law. Everything gets better and better."[13] "

A Successful Prediction - Moore's Law - Part 2

A Successful Prediction - Moore's Law - Part 1

“The complexity for minimum component costs has increased at a rate of roughly a factor of two per year ... Certainly over the short term this rate can be expected to continue, if not to increase. Over the longer term, the rate of increase is a bit more uncertain, although there is no reason to believe it will not remain nearly constant for at least 10 years. That means by 1975, the number of components per integrated circuit for minimum cost will be 65,000. I believe that such a large circuit can be built on a single wafer.[1] ”

Moore made this statement in 1965 and revised it to a doubling every 2 years in 1975. In part 2, I will include evidence that supports this statement.
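Moore's 65,000 figure is simple compounding. As a worked check, assume a 1965 starting point of about 64 (2^6) components per chip; that is an assumed round number, not taken from the quote. Ten annual doublings then give 64 × 2^10 = 65,536, matching the quote. The same hypothetical helper shows how much the revised two-year rate slows things down over the same decade.

```python
def projected_components(start: int, years: float, doubling_period: float) -> float:
    """Components per chip after `years`, doubling every `doubling_period` years."""
    return start * 2 ** (years / doubling_period)


# Assumed 1965 starting point of about 64 components per chip.
print(projected_components(64, 10, 1))  # 65536.0 (one doubling per year, 1965 -> 1975)
print(projected_components(64, 10, 2))  # 2048.0 (same decade at the revised two-year rate)
```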

A Failed Prediction: The Computer: Part 5

It's difficult to determine why Olsen believed this. Some believe that Olsen just didn't like the PC and that this affected his judgment. It seems very reasonable that a CEO might use what we call "gut instinct" to lead a company, and that Olsen simply missed an opportunity. It also seems reasonable that Olsen didn't see the necessary infrastructure in place to allow the PC to flourish. For example, the PC has capitalized on information sharing via the Internet; maybe Olsen did not believe that the complex infrastructure needed to network computers across the world would ever be in place. Either way, it seems clear that any CEO should have a process in place to respond to inputs and identify future opportunities, to avoid missing out on large financial gains.

A Failed Prediction: The Computer: Part 4

Two websites are of particular interest when looking into this quote. The first disputes the quote, saying it was taken out of context: some say Ken Olsen meant that he didn't want a computer controlling his home, while others say he meant he didn't want a central computer in his home. A defense of Olsen's comments can be found here.

The second group says that Olsen made that comment, believed it, and was guiding the company away from the PC. Gordon Bell confirmed that Olsen said it and believed that Olsen just didn't like the small-computer idea. That evidence can be found here.

Olsen was eventually removed from his position at Digital, having missed the PC trend and the associated opportunities. I couldn't find documentation showing why Olsen believed the PC wouldn't succeed, or why he didn't give credit to the ideas of his staff. One reference suggests that his staff did have the vision, though - here is a quote from a web page

In this case, it appears that a personal bias led to the missed prediction by the leader of a company. Despite having staff who saw the potential of the personal computer, Olsen missed this opportunity. Regardless of the differing opinions, Digital was in a good position to capitalize in this space, and failed. The key lesson here seems to be to listen to those you have hired.

Tuesday, July 31, 2007

A Failed Prediction: The Computer: Part 3

Kevin Maney wrote a great online article that sheds some light on these comments. The article can be found here. In this article, many of the famous quotes predicting the computer's failure are debunked. Another article, published by Science and Technology, also showed one of Bill Gates's famous quotes to be false - it can be found here.

So it goes without saying that not everything we read is accurate, which is certainly true on the web. The two websites above show that contradictory evidence exists for common quotes. This underscores the importance of validation.

With this said, some of the quotes do appear to be true. For example, the quote from Ken Olsen:

• "There is no reason anyone would want a computer in their home."

— Ken Olsen, founder of Digital Equipment, in 1977.

Part 4 of this series will investigate whether any insight can be gleaned into why Ken Olsen felt this way. There might not be any specifics available, but it is worth checking out!

A Failed Prediction: The Computer: Part 2

Here are two quotes from this web page:

Computers in the future may...perhaps only weigh 1.5 tons.

- Popular Mechanics, 1949.

There is no reason for any individual to have a computer in their home.

- Kenneth Olsen, president and founder of Digital Equipment Corp., 1977.

And of course - going to Wikipedia - there are a number of quotes...

This entire section has been taken directly from Wikipedia.

- "There is no reason anyone would want a computer in their home." -- Ken Olsen, president, chairman and founder of Digital Equipment Corp. (DEC), maker of big business mainframe computers, arguing against the PC in 1977. (See [3] for historical context.)

- "I have traveled the length and breadth of this country and talked with the best people, and I can assure you that data processing is a fad that won't last out the year." -- The editor in charge of business books for Prentice Hall, 1957.[1]

- "640K ought to be enough for anybody."[1] or "No one will need more than 637 kilobytes of memory for a personal computer."

Two variants of the same quote, often misattributed to Bill Gates in 1981. Gates has repeatedly denied ever saying this, and he points out that it has never been attributed to him with a proper source. In fact, the memory limitation was due to the hardware architecture of the IBM PC.[2]

- "But what... is it good for?" -- IBM executive Robert Lloyd, speaking in 1968 about the microprocessor, the heart of today’s computers.[citation needed]

- "We will never make a 32 bit operating system." -- Bill Gates[3]

The page that the above quotes were taken from can be found here.

I thought that these quotes were interesting - but are they all true? As with all things on the web, they should be validated. See Part 3 for validation...

A Failed Prediction: The Computer: Part 1

Initially, I plan to track down who believed the computer would fail, and then see if I can determine how they came to this conclusion. Stay tuned for more information as it becomes available. My only disclaimer is that I am going to post information as I find it. My goal is to write small, stand-alone, bite-size posts. As such, there might be some duplication of information in the attempt to make each post stand alone.

Web 2.0 - Google Docs

Sunday, July 29, 2007

Web 2.0 - iGoogle and Picasa

Anyway, after I took a bunch of pictures of how to perform this task, I had to think about how to nicely include them in a blog. I opened Picasa and found the pictures I had stored via Snagit. I realized I had captured the images as PNGs - but blogs like JPEGs. A quick right-click from Snagit, and they were all JPEGs. I then went into Picasa and was going to upload them to the web. As I was waiting for the upload to complete, I noticed a button called Blogline, so I clicked it. I was shocked when all the pictures were uploaded into this blog, and all I had to do was adjust the spacing. The more I use Picasa, the more I am impressed.

OK - sorry for the distraction, but that lesson was important enough to get some attention.

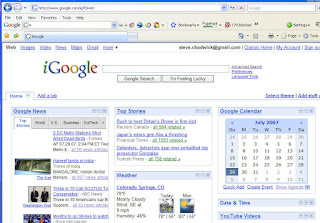

OK - iGoogle... When I was doing a couple of searches today, I saw a link called iGoogle, was curious what it did, and down the rabbit hole I went. The link is at the top right-hand side of the Google main page - I have included a figure showing its specific location.

Click that link and see where you go!

Here is the screen that comes up when you click iGoogle. Notice that it has a lot of features, many of which are linked to other Google tools. For example, my calendar came up, along with some news settings that I had previously set. What is really cool: grab one of the gadgets and move it wherever you want it - that's Ajax in action. There are a lot of gadgets that can be added and removed as needed.

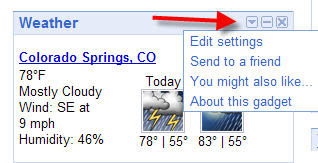

One item of interest was the weather - the default location that came up wasn't of interest. However, by clicking the drop-down menu button - the user gets choices - and we all like choices. See the next figure for specifics.

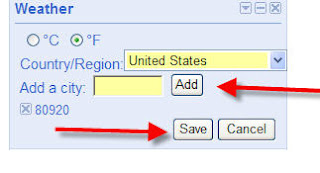

Here are the options that came up. The nice thing for me was that I could customize my region by entering a zip code or city name. Save the choices and there you go - the weather is customized for your area. To return to the original Google, simply go back to the top right and click "classic"; this will take you back to the normal Google page.

Enjoy!

SL and proxy servers - is there a solution?

Thursday, July 26, 2007

Web 2.0 Google Picasa

Flickr - initial impressions

I then signed up to add family members, and that interface wasn't designed by anyone who knows about GUIs. That is an issue because this page is likely used frequently. All fields are required, so their use of a grayed-out field (shown below) cost me about 5 minutes of searching. Of course, the error message didn't take me to the point of origin - the text field. It takes 1 line of JavaScript to place the cursor in the offending location - and 1 more line to turn the label red. It baffles me why they have missed out on this usability opportunity. I have included a photo below to show the issue.
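For the curious, here is roughly what those two lines could look like. This is a minimal sketch in TypeScript, not Flickr's code; the interface names and the `highlightInvalidField` function are hypothetical, and it assumes the form has already found the offending input and its label. In a browser, an `HTMLInputElement` and an `HTMLLabelElement` would fit these shapes.

```typescript
// Minimal structural types (hypothetical) so the sketch runs with or without a browser DOM.
interface FocusableField {
  focus(): void;
}
interface FieldLabel {
  style: { color: string };
}

// Hypothetical helper: called when validation finds an empty required field.
function highlightInvalidField(field: FocusableField, label: FieldLabel): void {
  field.focus();             // line 1: put the cursor in the offending field
  label.style.color = "red"; // line 2: turn that field's label red
}
```

The point is how little it takes: once the validator knows which field failed, sending the user straight there is essentially free.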

Finally, I have to say that help, or a page that highlights all the features, has remained hidden. It might be there, but it's clearly not anywhere I am looking. Maybe I need to go back through and start looking at the grayed-out items. However, I always like to find that single page that walks through how to use the common features of a page. Initial impressions of Flickr: the concept is an A, the implementation a C, overall score B-.

Flickr - let's check that out

Tuesday, July 24, 2007

Web 2.0 Google Talk - Update

Monday, July 23, 2007

Web 2.0 Google Talk

Well, today I found something called Google Talk (http://www.google.com/talk/) and installed it on my computer. I have also sent out invitations to a number of people I used to communicate with, and am currently waiting to see if they respond. I am hopeful that this tool restores functionality that I once had. I will post an update, but this looks like a cool tool and might help me stay in touch with friends and vendors.

Attacks without controlling a PC - Link

I read a great article that discusses how Denial of Service attacks are now being launched from networks without controlling a user's computer. With the number of security folks in our class, I thought I would include the link here in case someone else was interested. http://news.bbc.co.uk/2/hi/technology/6908064.stm

Sunday, July 22, 2007

Charting in .NET

I first tried Dundas. I found their object model very easy to use - without a doubt, it is very straightforward. I also like how they data-bind objects; it is very intuitive. The software has a nice interface and produces very nice graphs. Currently, this is my software of choice for graphing. However, I wish Dundas would make one improvement, and that is in their rendering. Read below, because when it comes to rendering, ChartFX has some very nice features.

ChartFX does an awesome job rendering graphs. The mouse-over features are remarkable. Specifically, when you hover over a series, the other series disappear, allowing the user to clearly see trends. Also, the data points are labeled with the series name and the x and y coordinates. All of this comes for free and is very responsive. However, ChartFX has two issues. First, its databinding object model is very weak when it comes to databases, especially if the user wants a cross-tab chart. Second, I encountered a number of miscellaneous bugs in the software: determining the visible chart area seems to be an issue, and upgrading the DLLs through the built-in mechanism seems to have problems as well.
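To show what a cross-tab chart asks of the data binding: the query returns flat rows, and the chart needs one series per group with values lined up against shared x-axis categories. Below is a minimal TypeScript sketch of that pivot, done by hand before handing data to any charting control. The `SalesRow` shape, its field names, and `toCrossTab` are hypothetical, and this is not the ChartFX or Dundas API. The sketch assumes duplicate (region, month) rows should be summed and missing combinations plotted as 0.

```typescript
// Hypothetical shape of one row returned by a database query.
interface SalesRow {
  region: string; // becomes a chart series
  month: string;  // becomes an x-axis category
  total: number;  // becomes the y value
}

// A cross-tab: shared x-axis categories plus one aligned value array per series.
interface CrossTab {
  categories: string[];
  series: Map<string, number[]>;
}

function toCrossTab(rows: SalesRow[]): CrossTab {
  // Collect x-axis categories in first-seen order and remember each one's position.
  const categories: string[] = [];
  const categoryIndex = new Map<string, number>();
  for (const row of rows) {
    if (!categoryIndex.has(row.month)) {
      categoryIndex.set(row.month, categories.length);
      categories.push(row.month);
    }
  }

  // Build one value array per series, zero-filled so missing combinations plot as 0.
  const series = new Map<string, number[]>();
  for (const row of rows) {
    let values = series.get(row.region);
    if (values === undefined) {
      values = new Array<number>(categories.length).fill(0);
      series.set(row.region, values);
    }
    // Duplicate (region, month) rows are summed into the same cell.
    values[categoryIndex.get(row.month)!] += row.total;
  }
  return { categories, series };
}

// Usage: three flat rows become two series aligned on the same months.
const chartData = toCrossTab([
  { region: "East", month: "Jan", total: 10 },
  { region: "West", month: "Jan", total: 5 },
  { region: "East", month: "Feb", total: 7 },
]);
```

Here the categories come out as Jan and Feb, East becomes [10, 7], and West becomes [5, 0] because it has no February row. A charting library with good databinding does this pivot for you; with a weaker object model, you end up writing something like this yourself.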

In summary, I would offer these thoughts on the strengths of each vendor:

Dundas

- Great object model - easy to use

- Exceptional databinding

- Seems very stable, few software bugs

- Great tech support

ChartFX

- Best chart rendering for features (mouse-overs, x/y coordinates, etc.)

- Interface presented to the user during run-time is more polished.

Saturday, July 21, 2007

Second Life does perform on Gateway Tablets

Another configuration item was turning off the fog. Again - this made it a bit crisper.

Thanks Cyn for the help!

scc

Monday, July 9, 2007

Second Life Issues

Second Life just doesn't run on the Gateway Tablet PCs. I have given up in frustration. I have tried new video drivers, defragmenting the hard drive, rebuilding the system, closing down other apps, etc. In the end, when I do get it to run, it is choppy at best. When I am done with the app, my PC tanks within an hour or so, which of course forces a hard reboot. I have seen Second Life run on more powerful machines, and it is very nice. However, trying to use it on the Gateway tablet is a mistake. Maybe my rant should be about Gateway Tablets and not Second Life....